In an instant

| num_rows |

|---|

| 3367004170 |

I was going to write a blog post comparing arrow-ballista and duckdb on a large task, but duckdb was so fast it was alarming. So I decided to write down some of my observations of using duckdb on the NYC Taxi Dataset.

Context

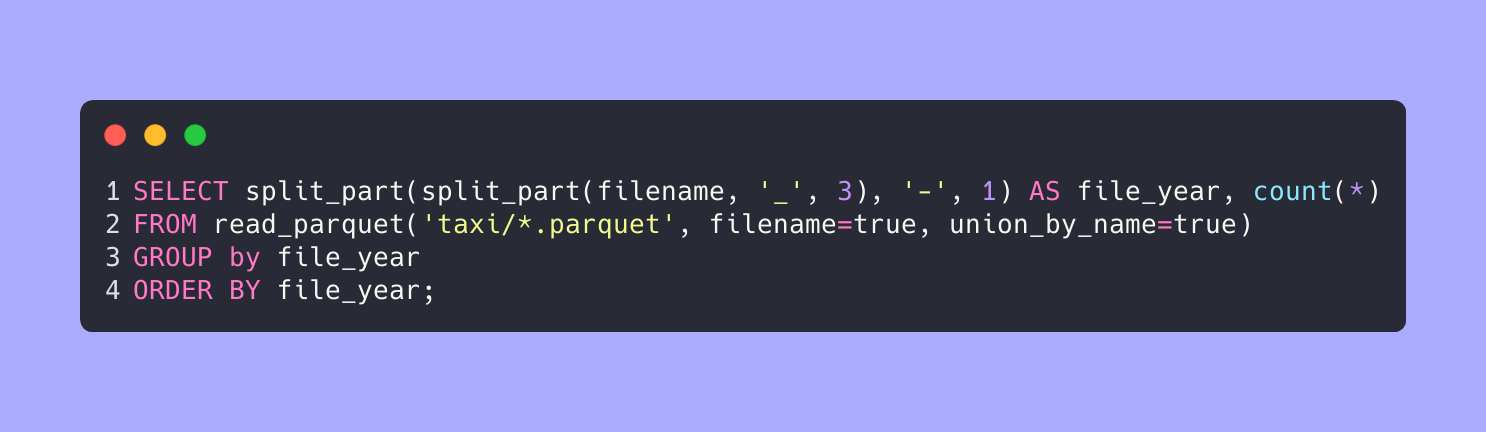

I used Duckdb v0.9.2 on a 96-core Dual Xeon workstation with ~5TB of disk space. I had good results on my 16-core workstation, too, but markedly faster results on the big workstation. There were 439 parquet files as of January 2024. I put the parquet files in one directory and used read_parquet('taxi/*.parquet', filename=true, union_by_name=true), allowing duckdb to read the files in parallel. I used the duckdb cli, and gave it a database on disk, duckdb taxidb, but read from the parquet files throughout.

Interesting Results

Messy data example

This is the record with max(total_amount). It seems like an outlier, where it should be 15.0 but there was an accidental 10000000 added in.

| fare_amount | extra | mta_tax | tip_amount | tolls_amount | total_amount | filename |

|---|---|---|---|---|---|---|

| 14.0 | 0.5 | 0.5 | 0.0 | 0.0 | 10000015.0 | taxi/yellow_tripdata_2014-08.parquet |

Trips by year

Peak trip count dropped in 2020 with Covid-19 but has increased some since.

| file_year | trip_count |

|---|---|

| 2009 | 170896055 |

| 2010 | 111529714 |

| 2011 | 176887259 |

| 2012 | 171359007 |

| 2013 | 171816340 |

| 2014 | 181284588 |

| 2015 | 228661528 |

| 2016 | 279631429 |

| 2017 | 317546944 |

| 2018 | 372645858 |

| 2019 | 368790969 |

| 2020 | 184638604 |

| 2021 | 221374980 |

| 2022 | 267424247 |

| 2023 | 142516648 |

Trips by hour of day

The # of trips varies by hour of day, but many trips didn't have this information.

| hour_of_pickup | count_star() |

|---|---|

| 0 | 50136451 |

| 1 | 36511245 |

| 2 | 26713761 |

| 3 | 19394015 |

| 4 | 14414029 |

| 5 | 13478009 |

| 6 | 29142824 |

| 7 | 49853450 |

| 8 | 61836553 |

| 9 | 63869199 |

| 10 | 63211677 |

| 11 | 65778781 |

| 12 | 69481912 |

| 13 | 69504611 |

| 14 | 72311337 |

| 15 | 69914744 |

| 16 | 62170489 |

| 17 | 72704518 |

| 18 | 85003150 |

| 19 | 85608774 |

| 20 | 79561917 |

| 21 | 78185955 |

| 22 | 74891862 |

| 23 | 64675234 |

| 1988649673 |

Thoughts on duckdb

In the past with a dataset with long strings coming from a ndjson file, I had segfaults with Duckdb. That was several minor versions of Duckdb ago. I was really impressed with the stability while throwing a huge amount of parquet at it. The difference in time it took for a query between the 16-core workstation and the 96-core workstation is exactly as you want it to be: duckdb can use all of the compute resources that you can throw at it.

Locality of compute, memory, and disk

Instead of throwing many (relatively)small computers at a problem, duckdb is a software that can use the resources of one big computer. The duckdb python package can be used for writing processes that run automatically, but I love the duckdb cli for exploring a dataset.

Go Forth and Crunch data

Try out duckdb with the knowledge that you can throw huge amounts of data at it and it will perform.